Redis weekly update #7 - Full of keys

Wednesday, 25 August 10

I'm back after two weeks of vacation: the sea was wonderful, I refused to travel at all, it was time for the real relax, that is, doing as little as you can and enjoy your friends, family, and the summertime. I hope you had a good stop too... in the Redis IRC channel one day I read that in Europe we have guaranteed vacation days because of our weak european bodies :) but whatever is your continent don't miss the opportunity to stop a bit!

And what's cool about stopping is how nice it feels to start hacking again after a few days of not writing a single line of code, so you can see a number of commits already in the master branch of Redis, including fixes for old important bugs and other little improvements. I'm processing the open issues one after the other, and when I'll done, it will be time to release 2.0.0 as stable.

But in this weekly update I want to talk about something else, that is, memory efficiency.

In practice instead of using a real hash table, with all the overhead involved (the hash table itself, more pointers, redis objects for every field name and value) we store it as a single "blob" with prefixed-length strings. The speed will not suffer as we do this trick only for small fields and small number of elements and there is more cache locality, but the memory reduction is impressive.

After all if your overbook factor is 5 or 10, this is still O(1) in amortized time, and still very fast in practice.

We'll do this and save tons of memory? Yes, but this is not the right time to merge such a change as 2.2 is going to be already too big, and we need to focus on redis cluster ASAP. We need to wait a few more months. But... the good thing is, we can already implement it in the client library, using hashes.

This is how it works. If I want to store the key "foo", I do the following:

Now let's do some math:

so... make sure to change the following two parameters in redis.conf:

In the future we'll make this transparent changing the key space implementation to do this tricks automatically, but I guess client library hackers may want to implement a similar interface already. Note that since the hashes also provide the HINCRBY command it's possible to have tons of atomic counters using very little memory.

As you can guess there is another good effect: the more keys you have per hash, the more severe the advantage of VM will become, as you are going to have "big values".

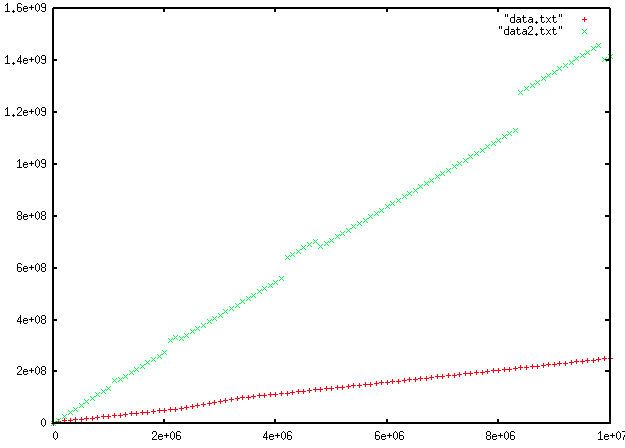

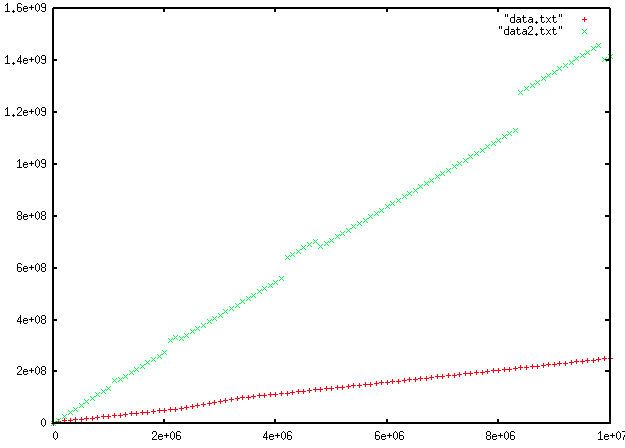

This time I want to end the article with a graph of the memory usage, as you can see the memory gain starts very early and is more or less linear (note: in X is number of keys, and in Y memory used):

I bet there are better schemas, and there is of course some drawback to address, so if you are a creative client lib hacker, have fun! ;)

And what's cool about stopping is how nice it feels to start hacking again after a few days of not writing a single line of code, so you can see a number of commits already in the master branch of Redis, including fixes for old important bugs and other little improvements. I'm processing the open issues one after the other, and when I'll done, it will be time to release 2.0.0 as stable.

But in this weekly update I want to talk about something else, that is, memory efficiency.

Why hashes do it better?

Hashes do it better! As you probably already know if you are following this blog, If you store an N (with N small) fields object as independent keys (one field per key), or the same object as an hash with five fields, the latter will require in the average five times less memory. No magic involved, simply hashes (and lists and sets in 2.2) are designed to be encoded in a much more efficient fashion when they are composed of a few elements.In practice instead of using a real hash table, with all the overhead involved (the hash table itself, more pointers, redis objects for every field name and value) we store it as a single "blob" with prefixed-length strings. The speed will not suffer as we do this trick only for small fields and small number of elements and there is more cache locality, but the memory reduction is impressive.

An hash table of hashes

Now as you can guess, this may be applied to the main Redis key space as well... instead of having an hash table where every slot has a single element, it is possible to cluster multiple key-value pairs in a single slot. It's like if you overbook the hash table five or ten times: this will result in collisions. What you do is to take advantage of this collisions, storing the colliding key-val paris in an efficient way (that is, linearly).After all if your overbook factor is 5 or 10, this is still O(1) in amortized time, and still very fast in practice.

We'll do this and save tons of memory? Yes, but this is not the right time to merge such a change as 2.2 is going to be already too big, and we need to focus on redis cluster ASAP. We need to wait a few more months. But... the good thing is, we can already implement it in the client library, using hashes.

Redis Hash Layer

I called this trick RedisHL, as what I'm doing here is using Redis hashes as an additional layer to create an high level hash table on top of the redis key space.This is how it works. If I want to store the key "foo", I do the following:

- I compute the hex SHA1 sum of "foo", that is: 0beec7b5ea3f0fdbc95d0dd47f3c5bc275da8a33.

- I use the first four digits "0bee" to locate the (real) key where to store this key.

- I store this key as a field of the hash at key "0bee".

HSET 0bee foo barAnd so forth.

Show me the code

The code is a trivial wrapper to Redis-rb library:require 'rubygems' require 'redis' require 'digest/sha1'

class RedisHL def initialize(r) @redis = r end

def get_hash_name(key) Digest::SHA1.hexdigest(key.to_s)[0..3] end

def exists(key) @redis.hexists(get_hash_name(key)) end

def [](key) @redis.hget(get_hash_name(key),key) end

def []=(key,value) @redis.hset(get_hash_name(key),key,value) end

def incrby(key,incr) @redis.hincrby(get_hash_name(key),key,incr) end end

r = Redis.new() rr = RedisHL.new(r)

rr[:foo] = "bar" puts(rr[:foo])

Now let's do some math:

- The first four digits of a SHA1 digest means we have 65536 hashes.

- If we populate the system with one million keys, every hash will have more or less 15 elements per hash. Note that the distribution will near to perfect because of the SHA1 statistical properties.

- If we populate the system with ten million keys, every hash will have more or less 152 keys.

so... make sure to change the following two parameters in redis.conf:

hash-max-zipmap-entries 512 hash-max-zipmap-value 512With this change you can use this library to store 30 millions of keys without concerns.

Memory gain

What is the memory gain here? It is simply huge!- Space needed to store 10 million keys as normal keys: 1.7 GB (RSS)

- Space needed to store 10 million keys with this trick: 300 MB (RSS)

In the future we'll make this transparent changing the key space implementation to do this tricks automatically, but I guess client library hackers may want to implement a similar interface already. Note that since the hashes also provide the HINCRBY command it's possible to have tons of atomic counters using very little memory.

As you can guess there is another good effect: the more keys you have per hash, the more severe the advantage of VM will become, as you are going to have "big values".

This time I want to end the article with a graph of the memory usage, as you can see the memory gain starts very early and is more or less linear (note: in X is number of keys, and in Y memory used):

I bet there are better schemas, and there is of course some drawback to address, so if you are a creative client lib hacker, have fun! ;)

Commenti

1

25 Aug 10, 16:23:48

Glad you had a great vacation! Looking forward to Redis 2.0 and putting it in production :)

25 Aug 10, 16:42:28

@Aaron: thanks! Redis 2.0.0 is at this point really near. The same for the feature freeze of Redis 2.2.

27 Aug 10, 11:55:07

Wondering why the exists function only checks whether an entry exists with the same four first digits in the hash.

That entry might have different key. So the check seems incomplete.

That entry might have different key. So the check seems incomplete.

30 Aug 10, 19:17:31

I've done the same for python

import hashlib,redis

sha1 = hashlib.sha1

class RedisWrapper(redis.Redis):

def get_hash_name(self,key):

return sha1(key).hexdigest()[:4]

def exists(self,key):

return self.hexists(self.get_hash_name(key))

def get(self,key):

return self.hget(self.get_hash_name(key),key)

def set(self,key,value):

self.hset(self.get_hash_name(key),key,value)

def incrby(self,key,incr):

self.hincrby(self.get_hash_name(key),key,incr)

import hashlib,redis

sha1 = hashlib.sha1

class RedisWrapper(redis.Redis):

def get_hash_name(self,key):

return sha1(key).hexdigest()[:4]

def exists(self,key):

return self.hexists(self.get_hash_name(key))

def get(self,key):

return self.hget(self.get_hash_name(key),key)

def set(self,key,value):

self.hset(self.get_hash_name(key),key,value)

def incrby(self,key,incr):

self.hincrby(self.get_hash_name(key),key,incr)

30 Aug 10, 20:37:29

How can we do this for an mget command? We would need to issue multiple hmget's to get all the keys. Is there an easier way?

23 Sep 10, 15:28:30

I've posted a link to the google group thread for the answer to my question. It always bugs me when a google search returns a question with no answer.

http://groups.google.com/group/redis-db/browse_thr...

Enjoy

http://groups.google.com/group/redis-db/browse_thr...

Enjoy

03 Oct 10, 19:53:41

I am curious about how you calculated these numbers?

-----------------------------------------------

* Space needed to store 10 million keys as normal keys: 1.7 GB (RSS)

* Space needed to store 10 million keys with this trick: 300 MB (RSS)

-----------------------------------------------

Thanks!

-----------------------------------------------

* Space needed to store 10 million keys as normal keys: 1.7 GB (RSS)

* Space needed to store 10 million keys with this trick: 300 MB (RSS)

-----------------------------------------------

Thanks!

25 Feb 11, 12:46:36

if enable vm ,may be cause a problem:

cold key and hot key may in the same zipmapSet, cold key can not be swapped .

any body can resolve my guess?

cold key and hot key may in the same zipmapSet, cold key can not be swapped .

any body can resolve my guess?